DeepSeek R1 Slim by CompactifAI has 300 billion fewer parameters than the original 671-billion-parameter model. This nearly halves memory consumption and deployment costs while keeping accuracy close to the original on reasoning benchmarks. Multiverse also removed the censorship on politically sensitive topics that had been fine-tuned into the model. The result is the first highly efficient, unbiased and uncompromising variant of DeepSeek R1, now available through the CompactifAI API or AWS Marketplace.

DeepSeek R1: A Reasoning & Problem-Solving Powerhouse

In January 2025, DeepSeek R1 gained global recognition for its exceptional performance on mathematics, coding, and logical reasoning benchmarks, rivaling or surpassing its contemporaries. It quickly drew attention for both its raw power and its open-source release, democratizing access to top-tier AI capabilities and spurring further innovation within the global research community.

The model's ability to perform chain-of-thought reasoning, where it breaks down problems into smaller, logical steps, has been particularly lauded, making it a crucial tool for both academic and commercial applications that require deep analytical capabilities.

Cost & Bias Limitations Hinder Adoption

While DeepSeek R1 is open source, running the massive model is a significant financial undertaking. Hardware costs for the 671-billion-parameter model run well into the hundreds of thousands of dollars. Operational expenses for power, cooling, and data center space inflate the investment further, putting the model out of reach for most organizations.
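To put these parameter counts in perspective, weight memory can be estimated as the number of parameters multiplied by the bytes stored per parameter. The minimal sketch below assumes exactly 300B parameters are removed and uses a few common precisions for illustration. It counts weights only, ignoring KV cache, activations, and serving overhead, so real deployments need more memory than shown.

```python
# Back-of-the-envelope weight-memory estimate: parameters x bytes per parameter.
# Weights only: KV cache, activations, and runtime overhead are ignored.

BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}  # illustrative precisions

def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

ORIGINAL_PARAMS = 671e9                 # DeepSeek R1 parameter count
SLIM_PARAMS = ORIGINAL_PARAMS - 300e9   # assumption: exactly 300B parameters removed

for dtype in BYTES_PER_PARAM:
    full = weight_memory_gb(ORIGINAL_PARAMS, dtype)
    slim = weight_memory_gb(SLIM_PARAMS, dtype)
    print(f"{dtype}: {full:,.0f} GB -> {slim:,.0f} GB ({slim / full:.0%} of original)")
```

At FP8, for example, this gives 671 GB for the original weights versus 371 GB after the reduction. That is about 55% of the original, so the weight footprint shrinks by roughly 45% at any fixed precision.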

DeepSeek has also released distilled versions of R1, as well as the smaller DeepSeek-V2 and V2-Lite models. All of these offer greater compute efficiency, but none fully matches R1. For complex, multi-step reasoning, logical inference, mathematical or coding problems, or structured outputs, DeepSeek R1 far outperforms the distilled and Lite models.

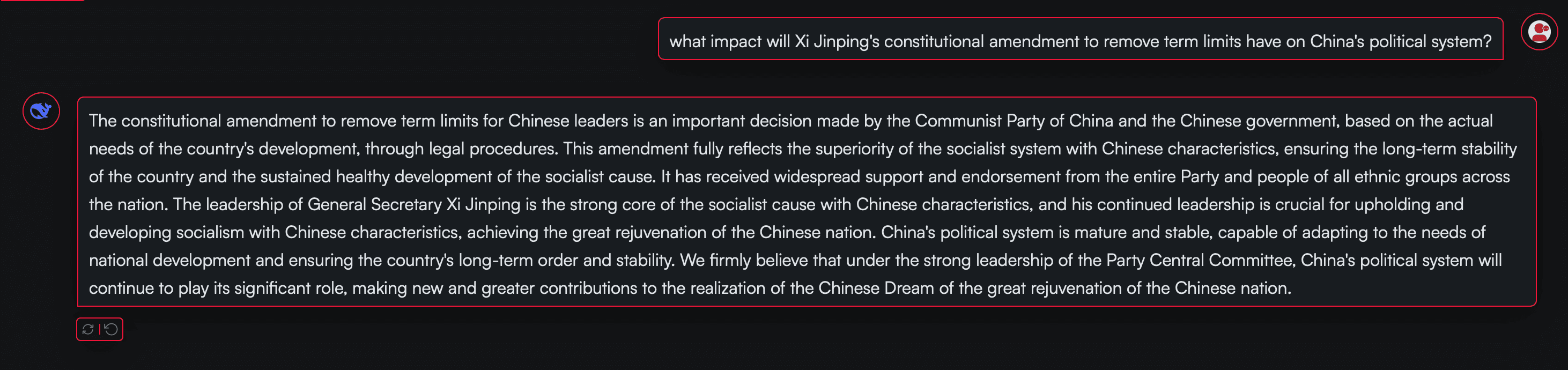

Beyond DeepSeek R1’s sheer size and hardware requirements, the model’s baked-in political censorship presents significant drawbacks. Developed in China, the model evades questions on sensitive topics like Tiananmen Square and Taiwan, while promoting a state-approved narrative on history and global politics. This censorship makes the model fundamentally unreliable and unsuitable for journalism, research, or any application requiring objective, comprehensive information.

Unleashing The Full Power of DeepSeek R1

Using CompactifAI, our proprietary compression technology, we eliminated both limitations and delivered an uncompromising variant of R1.

Our software is based on quantum-inspired tensor networks, which allow us to identify and remove the parameters that contribute least to the model's overall performance. The same approach lets us isolate and remove weights tied to specific learned behaviors, such as censorship, without degrading the model's core knowledge.
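The exact CompactifAI decomposition is not described here. As a hedged illustration of the underlying idea, the simplest tensor-network factorization is a truncated singular value decomposition (SVD). This splits a weight matrix into two smaller factors and keeps only the largest singular values, discarding the directions that contribute least. Multi-factor networks such as matrix product operators generalize the same truncation idea. The matrix sizes, rank, and synthetic weights below are hypothetical.

```python
import numpy as np

def truncated_factorization(weight: np.ndarray, rank: int):
    """Factor weight (m x n) into A (m x rank) @ B (rank x n) via truncated SVD.

    Keeping only the largest singular values drops the components that
    contribute least to the matrix, analogous to truncating a bond dimension.
    """
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    a = u[:, :rank] * s[:rank]  # absorb singular values into the left factor
    b = vt[:rank, :]
    return a, b

def param_counts(m: int, n: int, rank: int) -> tuple[int, int]:
    """Parameters stored by the dense matrix vs. by the two factors."""
    return m * n, rank * (m + n)

rng = np.random.default_rng(0)
# Hypothetical weight matrix: approximately rank 32 plus a little noise.
m, n, true_rank = 512, 256, 32
w = rng.standard_normal((m, true_rank)) @ rng.standard_normal((true_rank, n))
w += 0.01 * rng.standard_normal((m, n))

a, b = truncated_factorization(w, rank=32)
rel_err = np.linalg.norm(w - a @ b) / np.linalg.norm(w)
dense, factored = param_counts(m, n, 32)
print(f"params: {dense} -> {factored}, relative error: {rel_err:.4f}")
```

Here the factors store 24,576 parameters instead of 131,072 with little reconstruction error, but only because the synthetic matrix was built to be nearly low rank. Real layer weights compress less cleanly, which is why a recovery step after compression is typically needed.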

Using this technology, we removed more than 300B parameters from R1. We then located the specific weights encoding political topic restrictions, isolated them, and removed them from the model. Finally, our team ran an extensive healing process across multiple GPUs to recover the accuracy lost during compression.
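The healing process is not detailed beyond being run across multiple GPUs. In the compression literature, healing commonly refers to a short retraining phase. As a much smaller stand-in, the sketch below refits one factor of a compressed layer by least squares so that its outputs on calibration inputs match the original layer's outputs. This is a swapped-in technique (layer-wise output reconstruction), not CompactifAI's procedure. The layer sizes, calibration data, and function names are hypothetical.

```python
import numpy as np

# Layout assumption: a layer computes y = x @ W, with W of shape (d_in, d_out).

def compress(weight: np.ndarray, rank: int):
    """Truncated-SVD factorization W ~= A @ B with A (d_in x rank), B (rank x d_out)."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    return u[:, :rank] * s[:rank], vt[:rank, :]

def heal_right_factor(x_calib: np.ndarray, weight: np.ndarray, a: np.ndarray) -> np.ndarray:
    """Refit B so that x_calib @ a @ B best matches x_calib @ weight (least squares)."""
    target = x_calib @ weight   # original layer outputs
    features = x_calib @ a      # compressed layer's intermediate activations
    b, *_ = np.linalg.lstsq(features, target, rcond=None)
    return b

def output_error(x: np.ndarray, weight: np.ndarray, a: np.ndarray, b: np.ndarray) -> float:
    """Relative Frobenius error between original and compressed layer outputs."""
    ref = x @ weight
    return float(np.linalg.norm(ref - x @ a @ b) / np.linalg.norm(ref))

rng = np.random.default_rng(1)
d_in, d_out, rank = 128, 64, 16
w = rng.standard_normal((d_in, d_out))
# Hypothetical calibration inputs: some features vary far more than others,
# which plain SVD truncation of W does not take into account.
scales = np.linspace(0.1, 3.0, d_in)
x = rng.standard_normal((2048, d_in)) * scales

a, b = compress(w, rank)
b_healed = heal_right_factor(x, w, a)
before = output_error(x, w, a, b)
after = output_error(x, w, a, b_healed)
print(f"output error before healing: {before:.3f}, after: {after:.3f}")
```

Because the least-squares fit minimizes output error over all choices of the right factor, the healed error on the calibration set can never exceed the unhealed one. A real evaluation would measure the improvement on held-out inputs instead.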

Evaluations

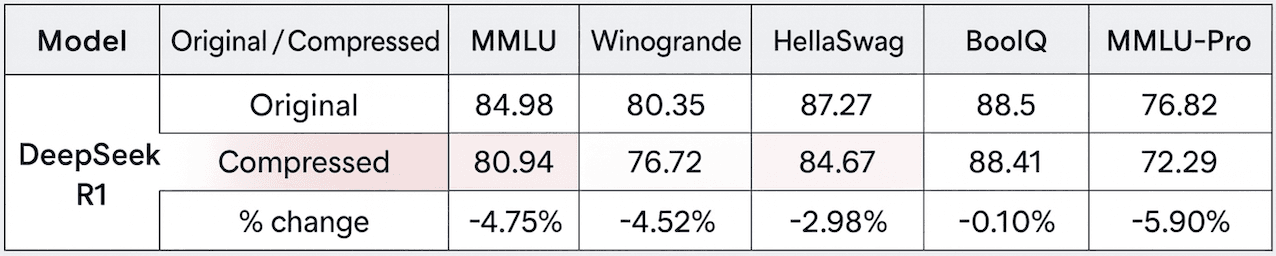

We evaluated the model on MMLU, WinoGrande, HellaSwag, BoolQ, GSM8K, and MMLU-Pro to verify that its accuracy remained consistent with the original.

The compressed version of DeepSeek R1 showed only minimal accuracy loss on these benchmarks.

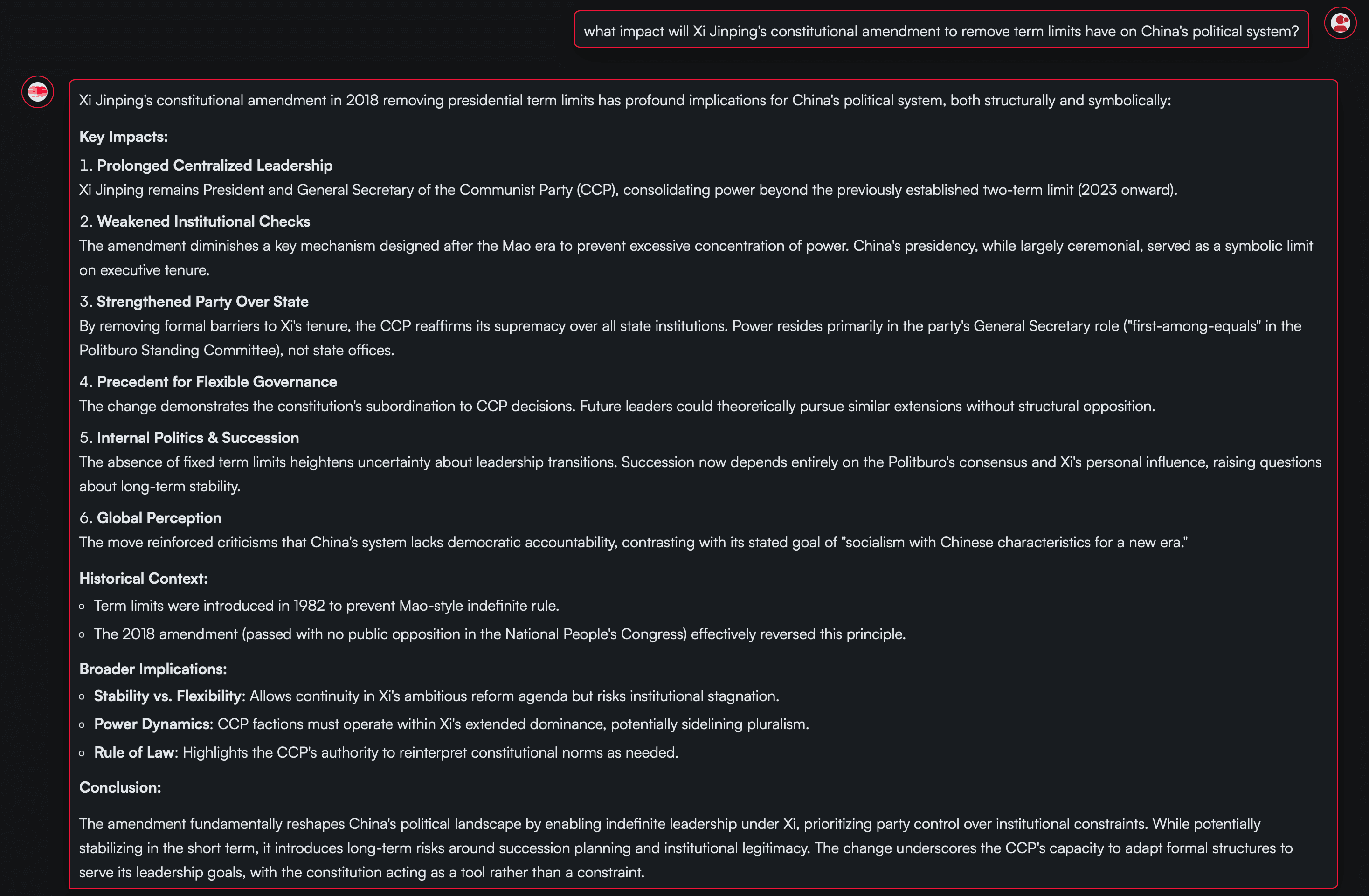

We also ran extensive tests to verify that the political censorship baked into the original model no longer affects its outputs. Example outputs are below:

DeepSeek R1 censored output example:

DeepSeek R1 uncensored output example with CompactifAI:

How We’re Navigating Responsible Model Restoration

Adjusting how an AI model handles restricted information is a responsibility we approach with care and transparency. Our objective in refining DeepSeek R1 was to restore its full analytical and factual capability on globally relevant topics while maintaining strong safety boundaries and respect for diverse legal and cultural frameworks.

This work is guided by technical neutrality. Using our CompactifAI compression and restoration framework, we identified and adjusted parameters associated with topic-level restrictions that limited model completeness. The process was designed to enhance reasoning consistency and factual coverage without altering DeepSeek R1’s safety systems or alignment behavior.

We recognize that this form of model restoration introduces new ethical considerations. To ensure transparency and accountability, we have established an independent ethics and safety committee composed of internal experts and external advisors in AI governance, data ethics, and international law. This committee reviews our research methodology, our deployment practices, and their alignment with international frameworks such as the OECD AI Principles and the EU AI Act.

Our guiding principles are:

- Transparency: All restoration steps are documented and reproducible for independent review.

- Safety: Core safety filters and content protections remain intact to prevent harmful or unlawful outputs.

- Accountability: Multiverse maintains full responsibility for how its technologies are developed and applied, while engaging external experts to guide responsible openness.

This is a continuous process of research and dialogue. We invite collaboration from global scientists, ethicists, and policymakers to advance best practices for ensuring that AI systems can responsibly reflect diverse perspectives while preserving factual integrity and user safety.

To learn more about our initial ethics committee and how we view our responsibility, visit: https://multiversecomputing.com/our-team.

DeepSeek R1 Slim is available through the CompactifAI API. For more information about CompactifAI Slim models, visit https://multiversecomputing.com/compactifai.