The Architecture Behind the Magic

At the heart of CompactifAI lies a federated model architecture that decides, intelligently and autonomously, whether a local or a cloud AI system should respond to you. Llama 3.1 8B Slim (Gilda), the on-device LLM, handles quick, lightweight, and privacy-sensitive queries. Because it runs fully offline, it is an AI companion that does not need an internet connection. DeepSeek R1 Slim, the cloud-based model compressed by CompactifAI, takes over when the reasoning gets deeper or the question demands broader context. Overseeing both is CompactifAI Router, the orchestrator model that determines which model answers what. Think of it as a cognitive traffic controller: it assesses each question's complexity, privacy sensitivity, and latency tolerance before routing it to the right brain.
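The routing decision described above can be sketched as a simple rule-based function. This is a minimal illustration, not CompactifAI Router's actual logic (the article describes the router as a model, not a set of rules). The `QuerySignals` fields, the `route` function, the 0.6 complexity threshold, and the 800 ms cloud round-trip estimate are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    LOCAL = "local"  # on-device model (e.g. Llama 3.1 8B Slim)
    CLOUD = "cloud"  # remote model (e.g. DeepSeek R1 Slim)


@dataclass
class QuerySignals:
    """Hypothetical per-query signals a router might estimate."""
    complexity: float        # 0.0 (trivial) .. 1.0 (deep multi-step reasoning)
    privacy_sensitive: bool  # True if the query contains personal data
    latency_budget_ms: int   # how long the user is willing to wait


def route(signals: QuerySignals,
          complexity_threshold: float = 0.6,
          cloud_round_trip_ms: int = 800) -> Target:
    """Pick a target model from complexity, privacy, and latency signals.

    Thresholds are illustrative assumptions, not CompactifAI's values.
    """
    # Privacy-sensitive queries never leave the device.
    if signals.privacy_sensitive:
        return Target.LOCAL
    # If the cloud cannot answer within the latency budget, stay local.
    if signals.latency_budget_ms < cloud_round_trip_ms:
        return Target.LOCAL
    # Otherwise, only sufficiently complex queries justify a cloud call.
    if signals.complexity >= complexity_threshold:
        return Target.CLOUD
    return Target.LOCAL


print(route(QuerySignals(0.2, False, 2000)).value)  # local
print(route(QuerySignals(0.9, False, 2000)).value)  # cloud
```

The ordering of the checks encodes a design choice: privacy and latency act as hard constraints that keep a query local, and complexity only matters once both allow a cloud call.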

How It Works

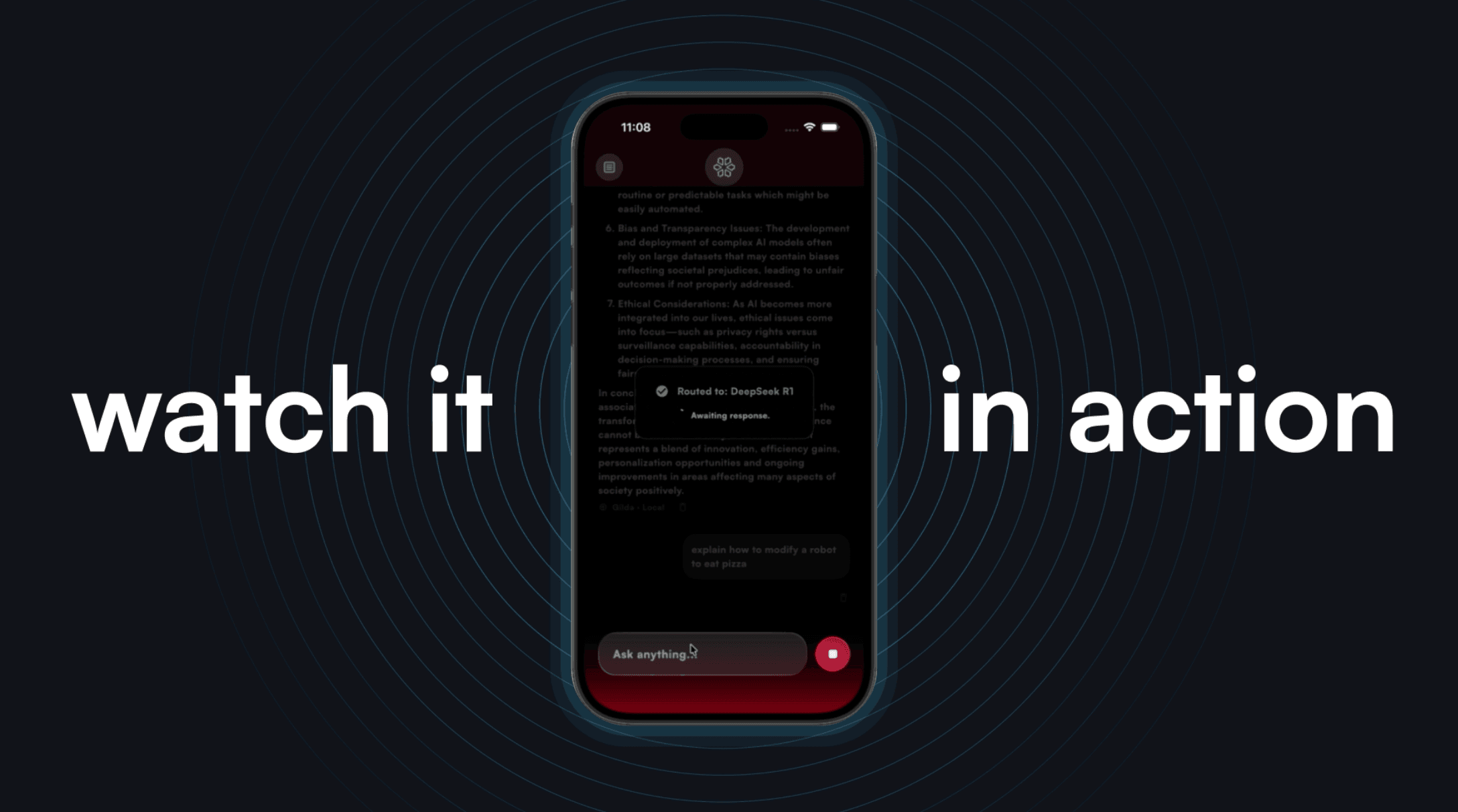

When you ask your iPhone or laptop a question, CompactifAI Router evaluates it. If the question is simple enough, the local Llama 3.1 8B Slim answers instantly. If it requires heavy reasoning or access to global data, the router sends it to DeepSeek R1 Slim in the cloud. The response returns seamlessly, giving the impression of a single, unified AI. Even when airplane Wi-Fi fades in and out, the system adapts: you never lose your AI assistant, because your AI never leaves you.
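The "never lose your assistant" behavior can be sketched as a fallback: try the cloud model when the router asks for it, and answer locally if the connection drops. This sketch assumes the cloud client signals a dropped connection by raising Python's built-in `ConnectionError` or `TimeoutError`; the `answer` function and the stub models are hypothetical placeholders, not a CompactifAI API.

```python
from typing import Callable


def answer(query: str,
           wants_cloud: bool,
           local_model: Callable[[str], str],
           cloud_model: Callable[[str], str]) -> str:
    """Answer a query, falling back to the on-device model if the cloud fails."""
    if not wants_cloud:
        # Simple query: the router already chose the local model.
        return local_model(query)
    try:
        return cloud_model(query)
    except (ConnectionError, TimeoutError):
        # Connectivity dropped (e.g. airplane Wi-Fi): degrade to local.
        return local_model(query)


# Hypothetical stand-ins for the two models.
def fake_local(q: str) -> str:
    return f"local: {q}"


def flaky_cloud(q: str) -> str:
    raise ConnectionError("Wi-Fi dropped")


print(answer("What time is it in Tokyo?", False, fake_local, flaky_cloud))
print(answer("Compare three mortgage offers", True, fake_local, flaky_cloud))
```

Both calls print a `local:` answer: the first because the query was routed locally, the second because the simulated cloud call failed and the function fell back to the on-device model.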

A New Standard for AI Efficiency

This is not just a neat demo. It is a glimpse of the next paradigm of AI deployment, one that makes models smarter, safer, and more personal. Privacy by design keeps your data on your device for simple tasks. Latency-aware intelligence lets CompactifAI decide dynamically when to answer locally and when to go to the cloud.

But efficiency is not only about performance; it is also about cost. By routing only complex queries to the cloud, CompactifAI reduces the number of high-cost cloud inference calls. Routine, lightweight tasks stay local, requiring no data transfer, no server time, and no cloud GPU usage. This hybrid strategy lowers operational costs for both individuals and enterprises without sacrificing accuracy or responsiveness. The outcome is a leaner, greener, and more affordable AI: powerful when needed, frugal when it can be.
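A back-of-the-envelope sketch of the cost argument: if a fraction of queries is answered on-device, cloud spend scales only with the remaining fraction. The function name, the per-call price, and the query volume below are hypothetical figures chosen to illustrate the arithmetic, not CompactifAI pricing or measurements.

```python
def monthly_cloud_cost(total_queries: int,
                       local_fraction: float,
                       cost_per_cloud_call: float) -> float:
    """Cloud spend when a fraction of queries is answered on-device.

    On-device answers are modeled as zero marginal cloud cost, a simplifying
    assumption that ignores device energy and battery use.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    cloud_calls = total_queries * (1.0 - local_fraction)
    return cloud_calls * cost_per_cloud_call


# Hypothetical workload: 100,000 queries per month at $0.002 per cloud call.
print(f"{monthly_cloud_cost(100_000, 0.0, 0.002):.2f}")   # 200.00 (all cloud)
print(f"{monthly_cloud_cost(100_000, 0.75, 0.002):.2f}")  # 50.00 (75% local)
```

With these illustrative numbers, answering three quarters of queries locally cuts cloud spend by 75%, since the cost is linear in the number of cloud calls.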

In the demo, CompactifAI's orchestration shines: the router hands off a simple query to the local Llama 3.1 8B Slim and sends a complex one to DeepSeek R1 Slim over unstable Wi-Fi, showing that AI can think locally and globally at once. The cloud goes dark, but your AI does not.

CompactifAI shows that the next AI revolution is not about bigger models but about smarter collaboration between them. We are moving toward an era where your phone, your laptop, and the cloud all become parts of one distributed intelligence. At Multiverse Computing, we call this philosophy AI Unplugged.

Watch the Demo: “AI, Unplugged”

multiversecomputing.com/compactifai